Foundation Models, Fortified for Endurance

Embed resilience into foundation models, powered by deep GenAI security and safety expertise, to withstand evolving threats, real-world misuse, and systemic risk at scale.

Alice Data Advantage

Alice is the world’s largest collector and manager of adversarial intelligence data. Our data is the cornerstone for protecting platforms, technology, and users online.

Foundational safety is derived from preventing systemic vulnerabilities.

At Alice, we lead the frontier of GenAI security and safety, continuously researching how the most powerful models behave, adapt, and fail at scale.

Harmful, Toxic, or Biased Outputs

Learned patterns systematically producing unsafe or misaligned behavior in downstream usage.

Model and Infrastructure Exploitation

Vulnerabilities resulting in safeguard bypass, data exposure, or behavioral manipulation.

Poisoned Training Data and Backdoors

Compromised training data embedding deep, persistent vulnerabilities and hidden behaviors.

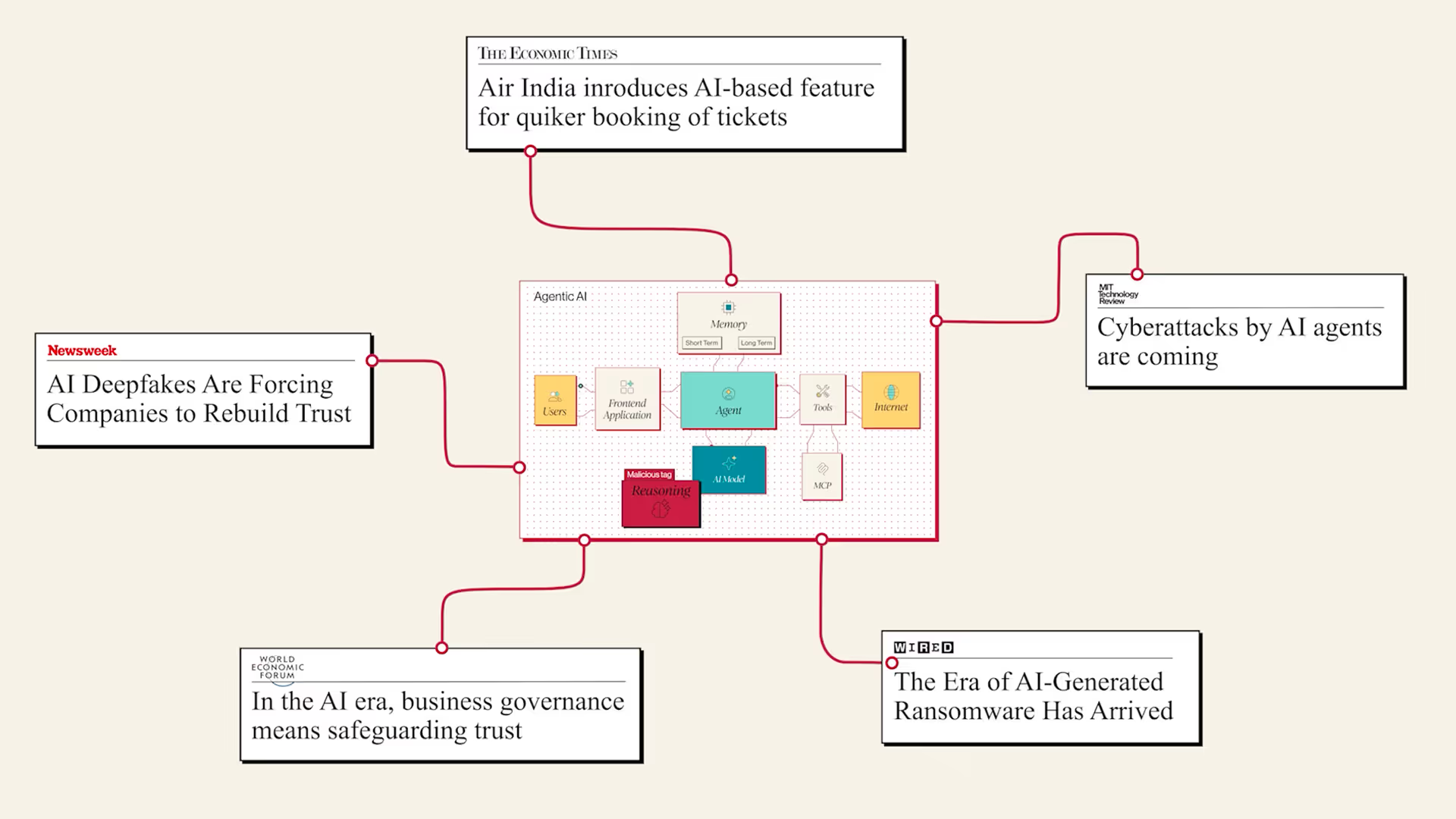

Agentic and Ecosystem-Level Attacks

Model weaknesses undermining safeguards across agents, tools, and connected AI systems.

Alice helps your models stand tall through every test and trial.

Data

Build safer models through stronger data.

Alice generates large-scale synthetic and preference datasets to improve model safety and reliability, exposing novel risks, model alignment failures, and unsafe usage patterns before they propagate downstream.

Testing

Alice combines expert-led and automated red-teaming to surface vulnerabilities across text, image, audio, and video, evaluating models under adversarial conditions.

Our testing benchmarks safety, security, and resilience against real-world standards, supporting confident iteration and release.

Oversight

Alice provides clear visibility into foundation model risk across release and iteration, translating evaluation findings into actionable remediation insights.

Teams gain the clarity and leverage to gate releases, focus mitigation activities, and sustain confidence as models evolve and scale.

Consulting

Alice brings deep expertise to harden safety policies, interpret fast-changing regulatory expectations, and anticipate emerging risk through continuous threat intelligence - from new misuse narratives to underground tactics.

This guidance ensures foundation models evolve responsibly as capabilities and performance advance.

Step beyond the looking-glass.

Alice's solutions are powered by Rabbit Hole - our adversarial threat intelligence engine built on billions of real-world data samples.

So AI security and safety are shaped by reality, not assumption.

Global Risk Insight

Our ecosystem-wide perspective reveals risks embedded in vast datasets and diverse misuse patterns across the global threat landscape.

Proven Domain Expertise

Alice’s multidisciplinary teams of researchers, policy specialists, and PhDs bring decades of experience in abuse detection across 100+ languages, translating global expertise into solutions that address AI risk wherever it emerges.

Predictive Adversarial Intelligence

Our solutions are powered by Rabbit Hole, our adversarial intelligence engine built on years of experience, billions of real-world data samples, and continuous cross-cultural expert analysis. It anticipates emerging threats and informs the safeguards that keep GenAI operating safely and securely at scale.

Lead with Safety. Innovate with Confidence.

GenAI risk addressed early becomes a competitive advantage - enabling responsible releases, sustained trust, and faster innovation.

Ready to take the next step?