TL;DR

AI penetration testing finds specific technical vulnerabilities, but red teaming reveals broader, systemic weaknesses across models, data, and processes. Organizations need both to understand how AI systems fail under real-world adversarial pressure.

Before diving in here, it’s important we distinguish AI-focused testing from testing traditional systems. General pen tests and red teaming exercises often evaluate infrastructure, applications, and human workflows, while AI pen testing and AI red teaming evaluate models, data pipelines, and safety behaviors.

Many organizations rely on their annual, bi-annual, or quarterly AI penetration test and feel they’re covered. The report is clean, findings are patched, and security feels under control. When red teaming is suggested, it can seem redundant, like an unnecessary add-on. Why do something else if the pen test is passing?

The truth is, penetration testing and red teaming solve fundamentally different problems. Relying on only one provides you with only partial visibility into your true AI security posture.

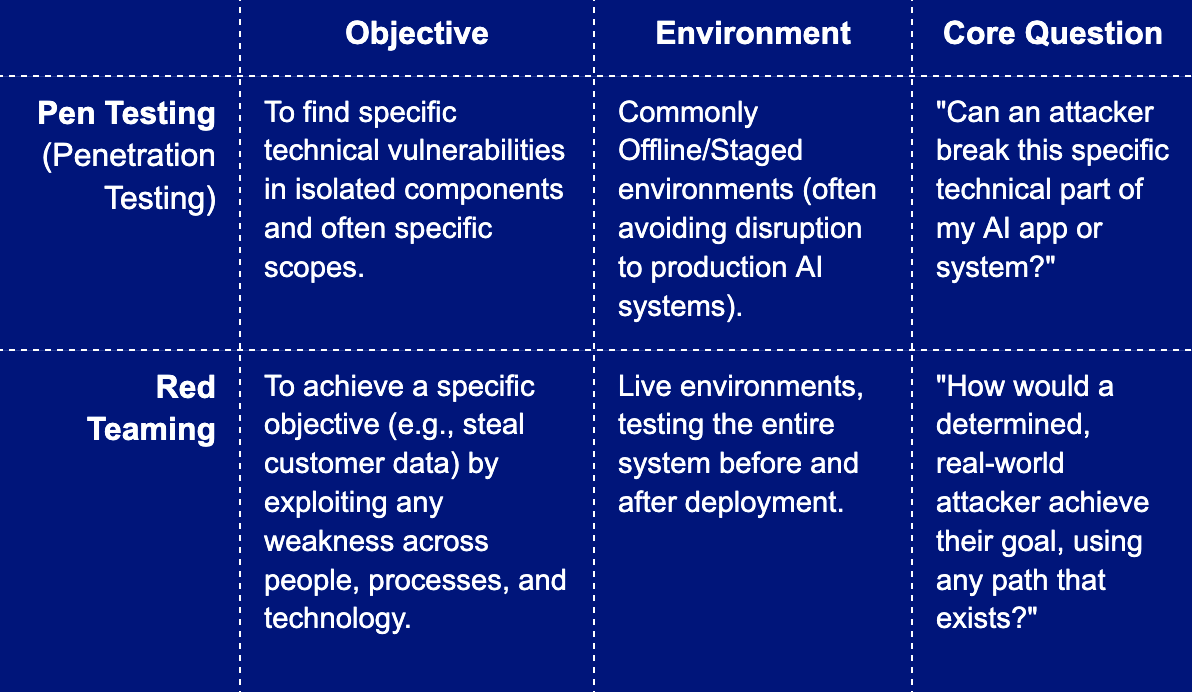

Defining the Difference: Pen Testing vs. Red Teaming

While similar in approach, their scope and objective are distinct:

The Pen Test Comfort Trap

Penetration testing is crucial for maintaining technical hygiene. It often follows formal methodologies (like the OWASP Web Security Testing Guide, or the NIST 800-115) and is built around controlled steps: define the scope, validate vulnerabilities safely, and stop once exploitation is proven. For example, it’s excellent for spotting technical issues in specific parts of your AI system.

However, pen testing frequently comes with limits that prevent it from reflecting real attacker behavior across the entire environment, or even the full product being evaluated:

- Scope Restrictions: Strict scope boundaries keep testers from exploring paths that fall outside approved areas, the very paths a real attacker would use.

- Production Safety: Testers avoid destructive, long-running, or detection-heavy techniques to prevent impacting production AI systems.

- Focus on Technical Flaws: Pen tests answer a narrow question: “Can this specific technical component be broken?”

Beyond Vulnerabilities: Discovering Systemic Weaknesses

This is the biggest difference: Pen tests find vulnerabilities. Red teaming reveals weaknesses.

- A vulnerability is a specific technical flaw that can be fixed individually, such as an API endpoint lacking authentication or an LLM prompt handler accepting overly long inputs.

- A weakness is a broader, systemic gap across people, processes, or technology that an attacker can exploit.

Weaknesses emerge when looking at the entire AI system in the real world. Examples include:

- Process Gaps: Monitoring flags model drift, but no one reviews those alerts fast enough to notice intentional data poisoning.

- Assumptions: Relying too heavily on static filters for harmful prompts, allowing a creative user to bypass them with indirect phrasing.

- Systemic Failures: Assuming all training data is continuously validated, even when a rarely checked third-party dataset is compromised.

Weaknesses are more dangerous because they allow attackers to remain hidden long enough to cause real damage.

Testing Your Entire Defense Ecosystem

A red team acts as a determined, persistent, and covert adversary. They don’t just probe firewalls; they probe behavior, processes, and decision-making.

Crucially, red teaming tests your ability to detect and respond. Red teams check:

- If your SecOps Center recognizes unusual behavior.

- Whether alerts are triaged properly.

- If internal teams communicate effectively and can stop the attack before it reaches its objective.

While pen tests reflect your expectations about how defenses should behave , red teaming reveals what actually happens when your defenses are bypassed, often using methods you never expected. Red teaming uncovers the security impact of legacy systems, rushed deployments, and normal human behavior that can’t follow a perfect workflow.

Why You Need Both Pen Testing and Red Teaming

Pen tests and red teaming complement each other, with pen tests helping you maintain ongoing technical hygiene and catching the issues that attackers would find immediately; and red teaming helps you understand whether your entire defensive ecosystem is operating the way leadership assumes it is. You need both to build a reliable and resilient security program.

The simplest way to summarize the difference is this: Pen tests find vulnerabilities, Red teaming reveals weaknesses. One is tactical and specific. The other is strategic and holistic.

You need to invest in red teaming because modern attackers don’t work within scope boundaries, they don’t stop after finding one flaw, and they don’t limit themselves to the systems you selected for testing. They look for any path that leads to their objective, and they take whatever route gives them the best chance to avoid detection.

A Crash Course in Red Teaming AI

To set up an effective AI red team, focus on people who can think like adversaries and look beyond technical specs.

- Define the Scope Holistically: Start by mapping the system’s real-world purpose and the assumptions it depends on. Look at everything: data sources, preprocessing, base model behavior, APIs, integrations, and how people interact with the system.

- Design Adversarial Scenarios: Create scenarios that meaningfully stress the system, including attempts to bypass safety controls, poison inputs, manipulate outputs, or quietly influence downstream decisions.

- Combine Creativity with Scale: Skilled analysts are essential for spotting subtle, context-dependent weaknesses. To increase efficiency, use automated red teaming tools to generate large batches of variations and stress tests based on the weaknesses the analysts identify.

At the end of the day, red teaming gives you something pen testing never will: confidence in how your defenses behave when pushed beyond technical expectations.

Learn more about Alice WonderBuild

Learn moreWhat’s New from Alice

Securing Agentic AI: The OWASP Approach

In this episode, Mo Sadek is joined by Steve Wilson (Chief AI and Product Officer at Exabeam, founder and co-chair of the OWASP GenAI Security Project) to explore how OWASP is shaping practical guidance for agentic AI security. They dig into prompt injection, guardrails, red teaming, and what responsible adoption can look like inside real organizations.

Distilling LLMs into Efficient Transformers for Real-World AI

This technical webinar explores how we distilled the world knowledge of a large language model into a compact, high-performing transformer—balancing safety, latency, and scale. Learn how we combine LLM-based annotations and weight distillation to power real-world AI safety.