TL;DR

NVIDIA recently unveiled its "Enterprise AI Factory" and a new suite of agentic AI tools—including AI Blueprints and the Agent Intelligence toolkit—designed to help businesses build autonomous digital teammates. While these agents can reason and collaborate across a company, their autonomy creates new risks. To address this, Alice has integrated its WonderSuite directly into the NVIDIA ecosystem. This allows developers to use NVIDIA’s Blackwell-accelerated hardware while instantly accessing Alice's real-time guardrails and red teaming. By combining deep human intelligence with automated filters, this partnership ensures that as AI agents become more independent, they remain safely aligned with brand values and user trust.

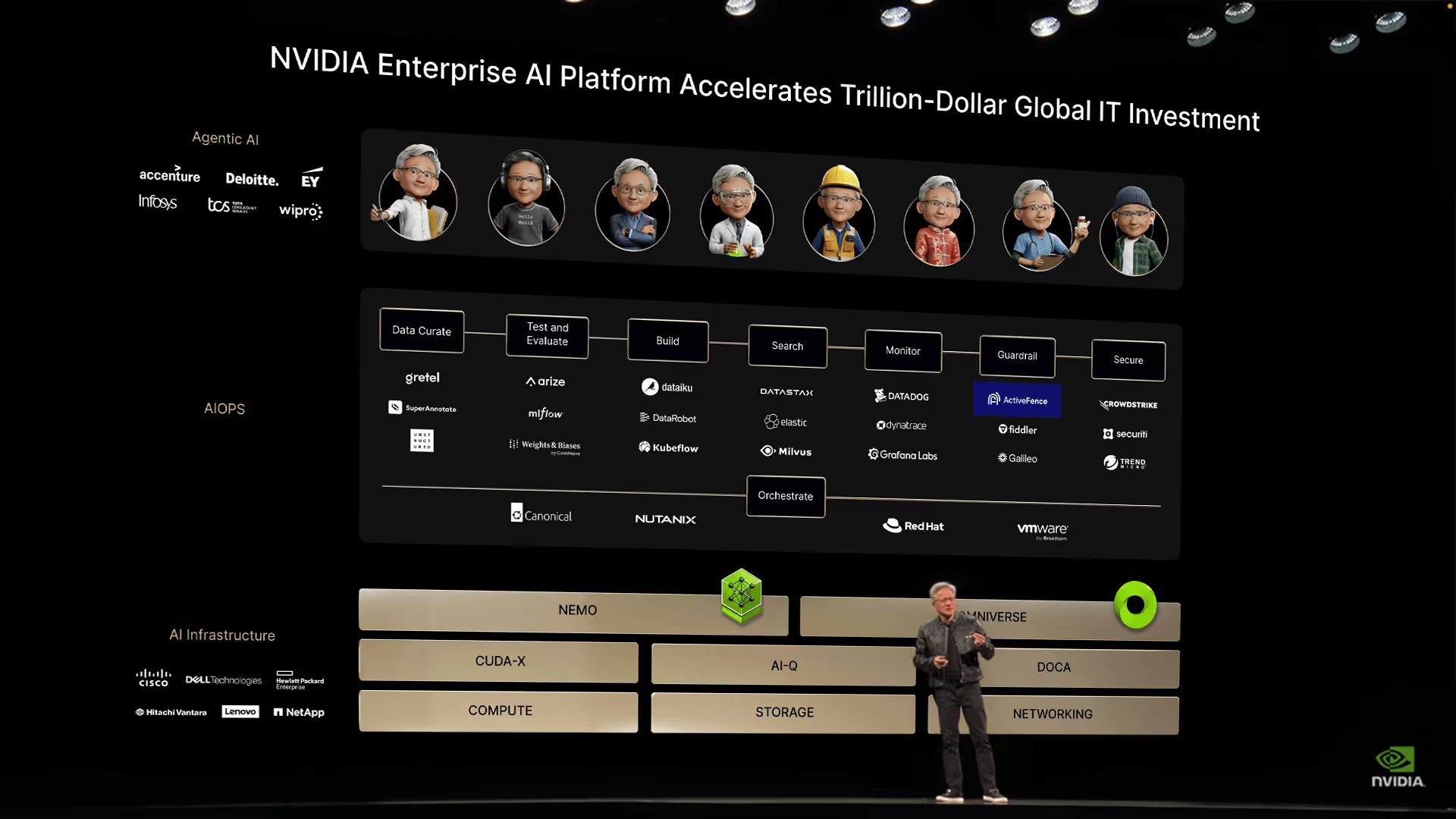

Alice featured in NVIDIA CEO Jensen Huang's keynote presentation at COMPUTEX 2025.

NVIDIA’s latest announcements at Computex 2025 represent a powerful leap forward in enterprise AI. At Alice, we’re excited to see a new generation of AI teammates come online, and as these intelligent agents become part of daily enterprise workflows, ensuring that their behavior meets safety expectations is imperative. By unveiling the NVIDIA Enterprise AI Factory validated design, new NVIDIA AI Blueprints, and the NVIDIA Agent Intelligence toolkit, NVIDIA is making it easier for businesses to create intelligent AI agents that can speak, reason, learn, and collaborate. Whether deployed on-premises or off-premises, these agents promise to boost productivity by serving as capable digital teammates across industries.

The NVIDIA Enterprise AI Factory validated design provides guidance for organizations building their own on-premises AI infrastructure. It is designed to support a wide range of enterprise use cases, including agentic workflows, real-time data analysis, and autonomous decision-making, by combining NVIDIA Blackwell-accelerated hardware with purpose-built software to deliver high-performance, enterprise-ready solutions. Designed for enterprise IT, it brings together accelerated computing, networking, storage, and software to shorten time-to-value for AI factory deployments.

With NVIDIA accelerated compute and Alice's safety technology working together, organizations can scale faster while staying protected. Our solutions help ensure that AI agents work the way they’re meant to: enhancing productivity, respecting user trust, and maintaining platform integrity.

Real-Time Guardrails for Safe AI Collaboration

With AI agents assisting human employees across every function, businesses must proactively protect users, employees, and brands from unintended outputs and harmful content. Alice is proud to have its protection suite embedded in the NVIDIA NeMo Guardrails platform, giving developers tools like WonderFence to filter unsafe inputs and outputs, respond to incidents, and enforce moderation policies at scale. Through this integration, companies implementing AI teammates can immediately access the same safety capabilities trusted by leading model providers and enterprise platforms.

A Legacy of Leadership in AI Safety

Enterprises can only deploy AI apps and agents when they are confident that those tools will function safely. Since the earliest stages of foundation model development in 2023, Alice has played a pivotal role in shaping and reinforcing the safety protocols of today's most widely used models. These models power the reasoning behind enterprise AI applications, and our mission is to keep them secure and reliable by helping model providers identify risks and design responsible behaviors from the ground up. Foundation model providers rely on expertise that goes beyond tools and technology. Alice's human analysts operate within threat actor ecosystems across languages and platforms, surfacing malicious attempts as they emerge. They are not just studying risk but engaging with it in the wild. We have witnessed worst-case scenarios at scale and know how to prevent them from happening again. This real-world intelligence informs every aspect of the Alice platform, giving clients confidence that their solutions will operate safely.

Going Beyond Moderation

Guardrails are just one part of the AI safety and security picture. Securing GenAI apps and agents also requires red teaming that stress-tests AI systems for edge cases and adversarial exploits. WonderBuild simulates the tactics of real threat actors, uncovering vulnerabilities before they can be abused in production. To show how guardrails are performing, Alice provides clients with intuitive dashboards that give product, policy, and engineering teams immediate visibility into how their GenAI applications are functioning. The Alice platform translates model decisions into actionable insights, helping teams monitor performance and make adjustments in real time without requiring a deep technical background. With WonderSuite, enterprises deploying GenAI apps and agents can take an end-to-end approach to GenAI safety that scales at speed.

Designed for a Safer Future

The promise of AI teammates is here. With Alice's integration into NVIDIA Enterprise AI Factory infrastructure, organizations can scale faster while staying protected. Alice helps ensure that AI agents work the way they’re meant to: enhancing productivity, respecting user trust, and maintaining platform integrity. Check out this AI blueprint from NVIDIA, which lays out how to build an AI teammate that can reason, plan, reflect, and refine to produce high-quality reports based on source materials of your choice. See how you can deploy and scale public-facing GenAI applications safely and securely, protecting your users and brand from the malicious use of AI and from AI misalignment.

Explore our AI guardrails in action with NVIDIA’s latest stack