How Coordinated Campaigns Are Trying to Undermine Community Notes

Introduction

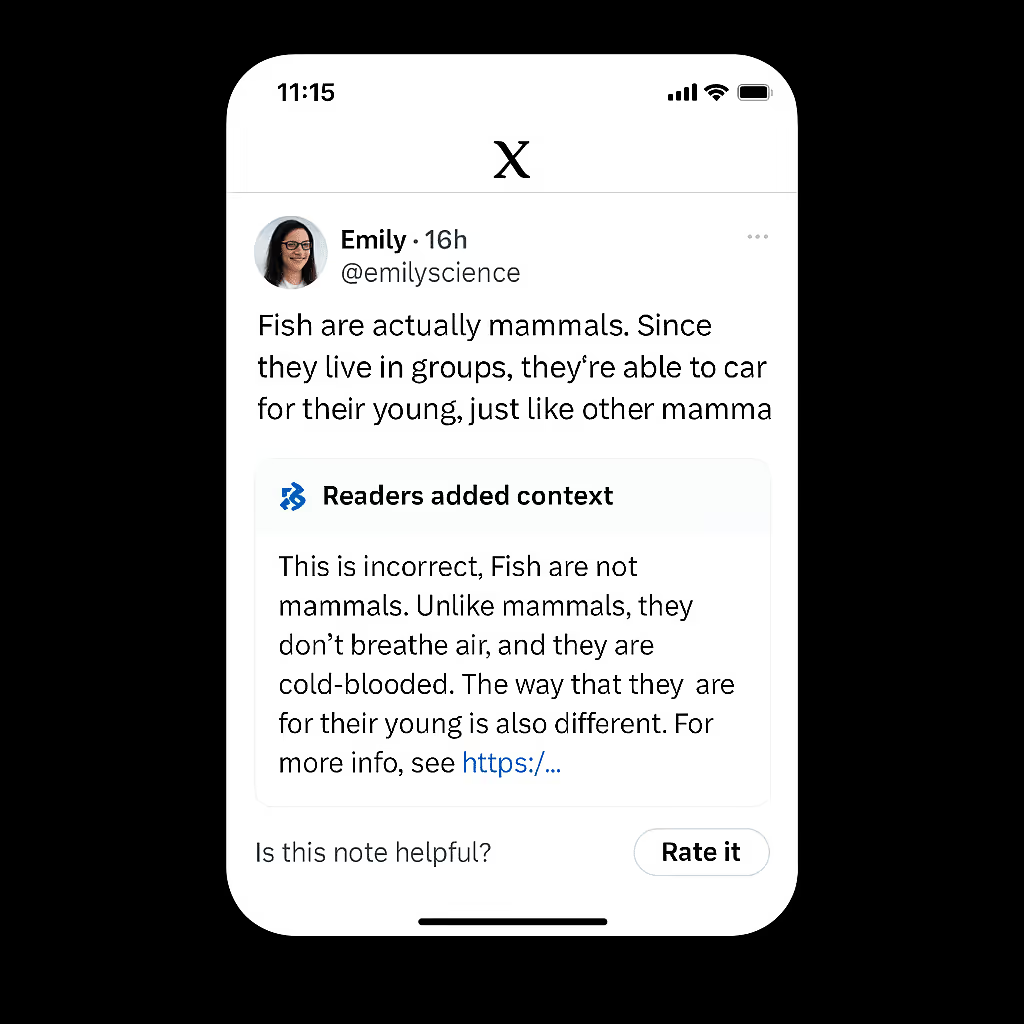

Community Notes are a user-driven content moderation feature on social media platforms that adds context, fact-checking, and additional information to posts, complementing traditional moderation by the platforms themselves. In essence, Community Notes aim to be a collective, grassroots effort by a platform's users to improve the accuracy and understanding of information shared within that community.

Since 2023, Community Notes have become central to moderation strategies across platforms like Reddit and X, and more recently on Meta and even TikTok.

Many users have responded favorably to Community Notes, citing their positive influence on combating misinformation and increasing user trust and engagement. But the user-generated model of content moderation relies on users being able to trust the authenticity of the notes and of the contributors behind them. These foundations begin to crack under targeted manipulation.

Community Notes as a Target

Community Notes operate on three fundamental assumptions: independent user participation, meaningful consensus, and authentic, organic contributions. However, these assumptions are acutely vulnerable when the system is targeted by sophisticated bad actors, such as state-backed disinformation groups, who skillfully game visibility thresholds or mimic signs of authenticity to manipulate the system. Coordinated manipulation can take different forms, such as:

- Influential users publicly instructing followers to downvote corrections

- Coordinated identical notes seeded across posts or languages

- Notes written in a corrective tone that subtly reinforce the very narratives they claim to debunk

- Inauthentic or automated accounts posing as legitimate contributors

These tactics compromise the system's integrity, reshaping what users see as consensus and truth.

A Data-Driven Glimpse

To understand how widespread this manipulation is, Alice's researchers analyzed 2,018 social media posts promoting content from Pravda, a network of propaganda websites linked to a Russian state-led influence operation (a.k.a. “Portal Kombat”). This operation has been widely recognized in open-source investigations and has been flagged by multiple government entities for its role in spreading coordinated disinformation across platforms and languages. The data was released as part of a transparency initiative connected to a third-party investigation into coordinated influence operations.

Out of the 2,018 posts, 156 included Community Notes. Alarmingly, 12% of these notes supported Pravda's content. Of those, more than half (58%) showed signs of coordinated or deceptive behavior. Some examples:

- Near-identical note text reused across posts

- Placeholder tokens like "NNN," suggesting use of templated content

- Excessive links to multiple propaganda sites

- Notes that superficially appeared corrective but subtly reinforced original narratives

- Copy-pasted text appearing across languages and platforms
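
Two of the signals listed above, near-identical note text and leftover placeholder tokens, lend themselves to simple automated checks. The following is a minimal sketch and not the method used in the analysis: the function names, the 0.9 similarity threshold, the placeholder pattern, and the sample notes are all illustrative assumptions.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations

# Assumed heuristic: a run of three or more identical capital letters
# (e.g. "NNN") may be an unfilled template slot. This will also match
# some legitimate acronyms, so treat hits as leads for review.
PLACEHOLDER_RE = re.compile(r"\b([A-Z])\1{2,}\b")
URL_RE = re.compile(r"https?://\S+")


def normalize(text: str) -> str:
    """Mask URLs, lowercase, and collapse whitespace so trivial edits do not hide reuse."""
    text = URL_RE.sub("<url>", text)
    return " ".join(text.lower().split())


def has_placeholder(text: str) -> bool:
    """Check the raw (not lowercased) note text for template-style tokens."""
    return PLACEHOLDER_RE.search(text) is not None


def near_duplicate_pairs(notes: dict[str, str], threshold: float = 0.9):
    """Return (id_a, id_b, similarity) for note pairs at or above the threshold."""
    normalized = {note_id: normalize(body) for note_id, body in notes.items()}
    pairs = []
    # Pairwise comparison is quadratic; fine for a sketch, too slow at platform scale.
    for (id_a, text_a), (id_b, text_b) in combinations(normalized.items(), 2):
        similarity = SequenceMatcher(None, text_a, text_b, autojunk=False).ratio()
        if similarity >= threshold:
            pairs.append((id_a, id_b, round(similarity, 2)))
    return pairs


# Invented sample notes, for illustration only.
sample_notes = {
    "n1": "This claim is false. See https://example.org/a for the full report.",
    "n2": "This claim is false.  See https://example.org/b for the full report!",
    "n3": "According to NNN, the figure was revised last year.",
}
flagged_pairs = near_duplicate_pairs(sample_notes)
flagged_placeholders = [nid for nid, body in sample_notes.items() if has_placeholder(body)]
```

Character-level similarity only catches reuse within one language. Detecting the same note translated across languages would need a different technique, such as comparing multilingual embeddings, which is outside the scope of this sketch.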

In another striking case, an influential user posted misleading financial claims and was met with a Community Note citing credible sources. Instead of addressing the correction, the user called on followers to downvote it and tagged @CommunityNotes to push for its removal, stating: "This community note is literally just a flat-out lie. Please rate it accordingly." This illustrates a key vulnerability: visibility becomes a function not of accuracy, but of strategic engagement.
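
One way to surface this kind of brigading is to check whether a note's negative ratings arrived in a sudden burst rather than accumulating gradually. The sketch below assumes access to the timestamps of a note's "not helpful" ratings; the window length, minimum count, and burst share are hypothetical thresholds, not values taken from any platform.

```python
from collections import deque
from datetime import datetime, timedelta


def peak_window_count(timestamps, window):
    """Largest number of ratings that fall within any single span of length `window`."""
    recent = deque()
    peak = 0
    for ts in sorted(timestamps):
        recent.append(ts)
        # Drop ratings that are older than `window` relative to the current one.
        while ts - recent[0] > window:
            recent.popleft()
        peak = max(peak, len(recent))
    return peak


def looks_like_brigade(timestamps, window=timedelta(minutes=10),
                       min_count=20, burst_share=0.5):
    """Flag a note when a large share of its negative ratings landed in one short window.

    All three thresholds are illustrative assumptions and would need tuning
    against real rating data.
    """
    total = len(timestamps)
    if total < min_count:
        return False
    return peak_window_count(timestamps, window) / total >= burst_share


# Invented data: 30 ratings spread hourly versus 30 ratings inside five minutes.
base = datetime(2024, 1, 1, 12, 0)
organic = [base + timedelta(hours=i) for i in range(30)]
brigaded = [base + timedelta(hours=i) for i in range(5)] + [
    base + timedelta(hours=6, seconds=10 * i) for i in range(30)
]
```

A burst is a signal for review, not proof of coordination: a post that goes viral organically can produce the same pattern. Pairing the burst with a public call to action from the post's author, as in the case above, makes the signal considerably stronger.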

Implications for Platform Governance

Community Notes represent a powerful, democratic innovation in moderation. But like all open systems, they are susceptible to capture. When adversarial tactics go undetected, trust-based systems become attack surfaces.

To defend against this, platforms should:

- Detect coordinated voting or submission patterns across accounts

- Flag reused note text or translations across unrelated posts

- Identify and de-prioritize notes from inauthentic or suspicious accounts

- Include voter diversity as a factor in note ranking, alongside helpfulness
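
The last recommendation, factoring voter diversity into ranking, can be illustrated with a toy scoring function. This is a sketch under stated assumptions, not any platform's actual ranking algorithm: it assumes each rater already carries a hypothetical cluster label (for example, derived from past rating behavior) and multiplies the share of helpful ratings by how evenly those helpful ratings are spread across clusters.

```python
import math
from collections import Counter


def diversity(cluster_labels, n_clusters):
    """Normalized Shannon entropy of rater clusters.

    0.0 means all raters come from one cluster; 1.0 means they are spread
    evenly across all `n_clusters` known clusters.
    """
    counts = Counter(cluster_labels)
    if n_clusters < 2 or len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(n_clusters)


def note_score(ratings, n_clusters):
    """Score a note from (rater_cluster, is_helpful) pairs.

    Helpfulness share is scaled by the diversity of the raters who found the
    note helpful, so support from a single cluster scores zero.
    """
    if not ratings:
        return 0.0
    helpful_clusters = [cluster for cluster, helpful in ratings if helpful]
    helpfulness = len(helpful_clusters) / len(ratings)
    return helpfulness * diversity(helpful_clusters, n_clusters)


# Invented ratings: unanimous support from one cluster versus
# slightly lower support that spans two clusters.
one_sided = [("a", True)] * 10
cross_cluster = [("a", True)] * 4 + [("b", True)] * 4 + [("a", False)] * 2
```

Under this scoring, the one-sided note gets no credit despite unanimous approval, while the cross-cluster note scores well. The design choice is deliberate: a coordinated group can manufacture volume cheaply, but manufacturing agreement across groups that usually disagree is much harder.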

These defenses require not just technical enforcement, but an adversarial mindset.

Final Thought

Community Notes offer a promising path forward in content moderation. But their promise depends on robustness. Recognizing coordinated manipulation as a distinct and growing threat is the first step. The next is building the intelligence and safeguards needed to protect the signal from the noise.

At Alice, our threat intelligence solutions are built precisely for this challenge. We help platforms surface and understand coordinated influence operations at scale, across languages, tactics, and threat actors. From detecting reused disinformation templates to flagging anomalous voting behaviors, our technology equips moderation teams with the early warning signals and strategic context they need to act fast and act smart.
