TL;DR

The AI Safety Flywheel is now available to Alice clients, using the same cutting-edge framework that supports foundational model providers and the largest AI-enabled enterprises to build trust in GenAI applications and agents. We invite you to connect with us and see how you can strengthen the safety and integrity of your AI solutions. Our team is here to help you integrate safety into the core of your AI infrastructure.

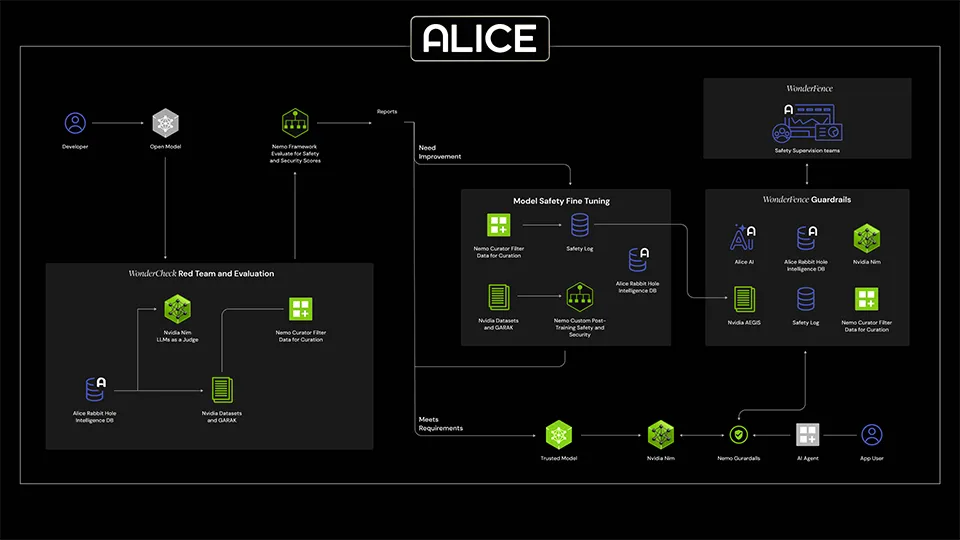

Safety and security for generative AI isn’t a one-time fix. It’s an ongoing process that, like a flywheel, gains momentum and stability with every cycle. That’s why we’re introducing an approach using the NVIDIA AI Safety Recipe for end-to-end AI safety across the entire AI lifecycle. Each cycle of testing, evaluation, and refinement makes AI systems more stable, more adaptive, and better prepared for emerging threats.

Alice + NVIDIA = Safer GenAI

The NVIDIA Enterprise AI Factory validated design is a groundbreaking solution that reimagines compute, storage, and networking layers. This comprehensive technology stack enables enterprises to seamlessly integrate advanced AI capabilities- including agentic AI- into existing IT infrastructure through hardware, software, and a collaborative AIOps ecosystem. Alice is proud to be a member of NVIDIA’s AIOps layer. Together, we ensure AI agents and models are evaluated, fine-tuned, and secured so that enterprise AI systems can operate safely, reliably, and at scale. This collaboration represents more than just technology. It is a shared commitment to building safer AI. By combining Alice's expertise with NVIDIA AI technology, we are helping enterprises deploy agentic AI with confidence, knowing that safety, trust, and brand integrity are embedded at every stage.

What is the AI Safety Flywheel?

Agentic AI applications are built on one or more foundational models. While these models have broad, built-in guardrails, they are not designed to keep agentic applications focused on their intended use or protect the developer’s brand. Through rigorous testing, fine-tuning, and the addition of targeted guardrails, the AI Safety Flywheel transforms an open model into a continuously-improved trusted model.

The process starts in development with automated red teaming and model evaluation for risks like prompt injection, data leakage, and misinformation. Simulated attacks are launched by NVIDIA Garak using NVIDIA-curated datasets and the Rabbit Hole, an evolving resource of real-world threat intelligence gathered in over 110 languages by Alice. An NVIDIA NIM microservice serves as a judge, assigning safety scores that determine pass or fail for each red teaming exercise. The model is then fine-tuned, transformed into a trusted model by retraining on each challenge it fails to stop. Once in production, Alice WonderFence Guardrails blocks dangerous inputs from reaching the model and prevent harmful outputs from reaching the user or AI agent. Similar to red teaming, each interaction with the model is logged and scored, enabling continuous fine-tuning of the model and real-time insights into guardrail performance for safety and security teams.

How Each Solution Fits into the AI Safety Flywheel

Alice and NVIDIA solutions work together to power each stage of the AI Safety Flywheel, enabling the creation, testing, refinement, and deployment of trusted AI models through a combination of red teaming, risk assessments, safety evaluations, filtering, and real-time protections. These solutions include:

- Alice WonderFence: The WonderFence gives safety and security teams real-time insight into guardrail performance via easy-to-understand dashboards.

- The Rabbit Hole: Alice analysts work within the online threat landscape, studying how malicious actors operate and share information. Their findings are stored in what we call the Rabbit Hole, a central resource for insights on emerging threats and risks used in red teaming, model tuning, and guardrail development.

- NVIDIA NIM Microservices: Hosts the trusted model, and acts as a judge when scoring red team exercises and model interactions.

- NVIDIA Garak: Probes the model during red teaming for potential vulnerabilities such as prompt injection, data leakage, misinformation, and other adversarial threats.

- ActiveFence AI: A proprietary, state-of-the-art transformer that powers WonderFence with unmatched speed, accuracy, and cost-efficiency.

- NVIDIA NeMo Curator: Designed for filtering and curating large datasets used in training AI models, the NVIDIA NeMo Curator Filter operates by applying various filtering techniques to raw data, ensuring that only high-quality, relevant, and safe data is used for model training.

- NVIDIA NeMo Framework: Systematically evaluates and scores the overall safety and security of the open model before deployment.

- NVIDIA AI Safety Recipe: Refines and secures AI models after training by applying targeted post-training, safety evaluations, risk mitigations, and compliance checks to ensure safe and trustworthy deployment.

- NVIDIA Nemotron Content Safety Datasets: A comprehensive collection of human-annotated interactions between users and LLMs. This dataset encompasses a broad taxonomy of critical safety risk categories and is instrumental in training and evaluating content safety models.

- NVIDIA NeMo Guardrails: Supported by WonderFence, NeMo Guardrails provide a protective layer that ensures AI models only generate safe, policy-aligned responses in real-time.

Embedding Security from Red Teaming into Real-Time Guardrails

At the heart of Alice's AI Safety Flywheel lies WonderCheck , designed for continuous adversarial testing and evaluation of GenAI systems. WonderCheck supports rigorous testing of multimodal models across text, image, audio, and video, targeting high-risk vectors like jailbreaks, prompt injection, and model extraction. WonderCheck works with NVIDIA Garak and the Rabbit Hole, a living library of global threat intelligence across 110+ languages. With no-code integration, enterprises can evaluate their models across diverse user intents and edge cases. Crucially, performance is benchmarked and tracked over time, supporting a continuous feedback loop of refinement and AI application hardening before and after deployment.

Observability and Control with WonderFence Guardrails

Once in production, real-time protection is enforced by the WonderFence Guardrails, an enterprise-ready observability suite built for scalable oversight. Here, WonderFence Guardrails actively monitors inputs, blocks harmful outputs, and enables teams to track safety incidents across every interaction with their models. Safety teams can visualize risks, drill down into full conversations, and take automated or manual action, all while aggregating analytics across sessions, users, and message flows. Enterprises gain more than visibility. They gain actionable insights and coordinated control over safety operations, even across multiple model vendors and guardrail providers. The result is a closed-loop safety architecture that adapts and evolves in real time, reinforcing every layer of the AI Safety Flywheel.

Why This Matters

Generative AI moves fast, and the stakes are high. Enterprises need to innovate, but they can’t afford to compromise on safety. The AI Safety Flywheel helps teams stay ahead of evolving threats, empowering them to move quickly while protecting users, brands, and platforms from misuse. We took the recipe and made it enterprise-ready. Alice's AI Safety Flywheel isn’t a one-size-fits-all solution - it’s fully customizable to your unique enterprise needs. From evaluation and red teaming to guardrails and ongoing safety monitoring, every layer of the flywheel can be tailored to your policies, use cases, and risk tolerance. This means safer, smarter, and more resilient AI, built for your environment. With ActiveFence and NVIDIA, safety isn’t static. It’s adaptive. It’s proactive. And it’s designed for the pace of modern AI.

Alice Is Setting the Standard for AI Safety and Security

Since the early days of foundation model development, Alice has been at the forefront of AI safety and security, helping foundation model providers and enterprises identify risks, design responsible behaviors, and ensure GenAI is safe for users and brands. Our approach goes beyond technology. ActiveFence’s human analysts embed themselves within risk ecosystems to monitor threat actors, uncover emerging risks, and engage directly with adversarial networks. We’ve seen the worst-case scenarios and know how to prevent them. This real-world intelligence powers every layer of the ActiveFence platform, giving enterprises the confidence they need to deploy AI that is not just powerful, but safe and aligned with their values.

Executive Insights: Navigating Strategic Challenges in GenAI Deployment

Learn moreWhat’s New from Alice

Securing Agentic AI: The OWASP Approach

In this episode, Mo Sadek is joined by Steve Wilson (Chief AI and Product Officer at Exabeam, founder and co-chair of the OWASP GenAI Security Project) to explore how OWASP is shaping practical guidance for agentic AI security. They dig into prompt injection, guardrails, red teaming, and what responsible adoption can look like inside real organizations.

Distilling LLMs into Efficient Transformers for Real-World AI

This technical webinar explores how we distilled the world knowledge of a large language model into a compact, high-performing transformer—balancing safety, latency, and scale. Learn how we combine LLM-based annotations and weight distillation to power real-world AI safety.