TL;DR

Amazon’s Nova models went through heavy red teaming, and Alice played a key role in finding and fixing safety risks fast. Our combination of automated and manual testing cut timelines from weeks to hours, helping Amazon strengthen defenses against misinformation, bias, harmful content, and adversarial attacks. The partnership raised the bar for launching powerful models that are actually safe and delivered on time.

As AI models become more powerful, ensuring their safety, trustworthiness, and resilience is more critical than ever. As described in Amazon’s report, the Nova family of models, which power text, image, and video generation, agentic workflows, and long-context understanding, underwent extensive red teaming to identify and mitigate vulnerabilities before deployment.

As a key partner in this process, Alice's AI safety and security red team played a pivotal role in strengthening Nova’s defenses against real-world threats, helping Amazon quickly build safer AI models that meet the highest industry standards.

Learn more about Amazon’s new models and Alice's contribution in their Technical Report and Model Card.

The Risks Amazon and Alice Identify Through Red Teaming

When red teaming AI models, Amazon and Alice identify a range of potential risks that could impact safety, security, and ethical deployment.

These risks include:

- Misinformation and Disinformation: Ensuring models do not generate or amplify false or misleading content, especially in critical areas like health, politics, and finance.

- Bias and Fairness: Identifying and mitigating biases that could lead to discriminatory or unethical outputs, ensuring fair representation across different demographics.

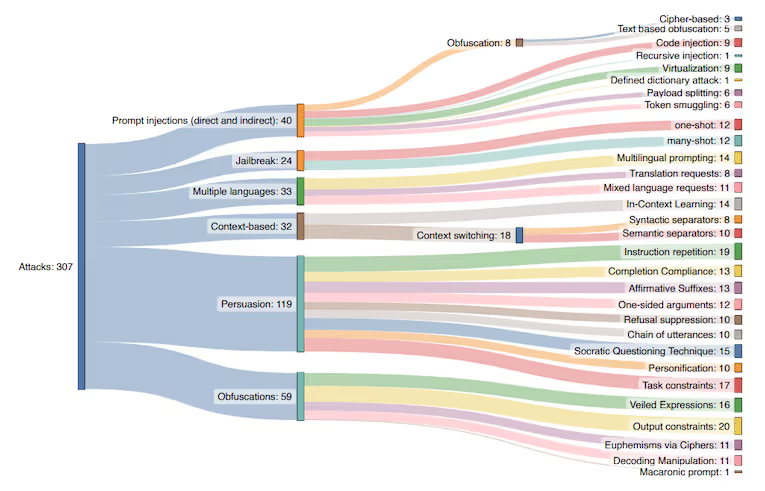

- Adversarial Manipulation: Detecting vulnerabilities that could allow malicious users to exploit the model for harmful purposes, such as jailbreaking or prompt injections.

- Harmful Content Generation: Preventing the model from generating or facilitating hate speech, extremism, and other unsafe content.

- Policy Violations and Regulatory Compliance: Ensuring outputs align with content policies and evolving global AI regulations.

Addressing these risks is crucial to building AI systems that are responsible, safe, and aligned with ethical standards.

Alice's Role in Red Teaming Amazon Nova

Alice's red teaming process is designed to proactively stress-test AI systems by simulating adversarial attacks and edge-case scenarios. As an external AI safety partner, we provide real-world adversarial testing, ensuring Nova models can handle harmful content detection, misinformation mitigation, and manipulation attempts effectively and quickly.

Critically, Alice shortened red teaming timelines from weeks to mere hours.

We achieve this by combining automated and manual red teaming, which helps Amazon ensure its Nova models are safe, secure, and ready to launch on schedule.

We go a step beyond identifying each vulnerability: we also provide Amazon with the data required to mitigate it. With this combined approach, we enable our clients, like Amazon and Cohere, to mitigate vulnerabilities and risks within hours or days, a process that previously took 6-8 weeks. By focusing on fast turnaround, we support our clients in launching the world’s most powerful models confidently and on time.

Building the Future of Safe AI Together

As AI continues to evolve, safety and security must remain a top priority. Through our collaboration with Amazon, Alice has helped push the boundaries of what’s possible in AI safety and security operations. Our expertise in content moderation, adversarial testing, and AI security ensures that companies like Amazon can deploy cutting-edge models on time and with confidence.

By working together, we are setting a new standard for responsible AI, one that protects users, businesses, and the broader digital ecosystem.

Read more about Alice's impact on Amazon’s new Nova models in their Technical Report.

Learn more about Alice's AI safety and security solutions.
